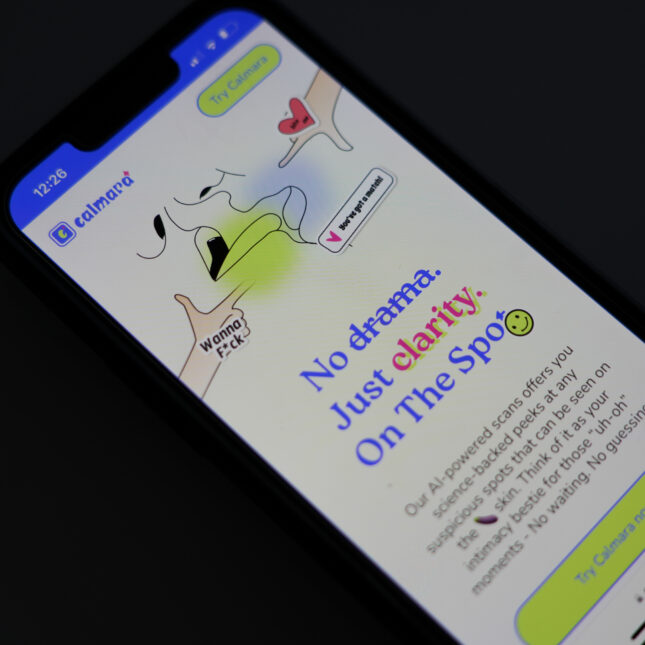

The company that claimed its AI model could identify sexually transmitted infections from a single penis picture was shut down by the Federal Trade Commission in July.

STAT wrote about the app, Calmara, and the immediate critical response it drew from patient advocates, doctors, and privacy experts in April. The founders did not adequately explain how they would protect sensitive user information and prevent minors from accessing the app. They also had little proof that the tool actually worked, with no peer-reviewed studies or clearance from the Food and Drug Administration.

In its letter to HeHealth, Calmara’s parent company, the FTC hammered on the app’s lack of scientific evidence. Specifically, the consumer protection agency pointed out that the data used to train the company’s AI model included photos from people who had never received a confirmatory diagnostic test. The data were also limited, and four out of five authors of the study were paid by HeHealth.

